The main advantage DDR2 has over good ole DDR memory is that it runs on a lower voltage, which lowers the power requirements, and allows it to scale higher with a small latency penalty.

GDDR3 (Graphics Double Data Rate 3) takes this one step further, requiring less voltage than DDR2 and scaling even further (though with some latency penalty). While the motherboard industry is making the transition from DDR to DDR2 memory, right now GDDR3 is only used on graphics cards. There are no current plans to migrate GDDR3 to the motherboard level - but who knows what the situation will be a year or two down the road. GDDR3 and DDR2 do share one thing in common: they are only packaged in BGA modules, leaving the old TSOP-II to be finally retired.

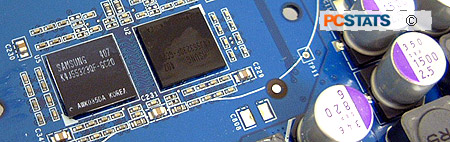

Micron were first out of the gate with GDDR3 DRAM modules, but the memory found on the Albatron GeForceFX 5700 Ultra is actually Samsung K4J55323QF-GC20 GDDR3 DRAM. Compared to the Samsung K4N26323AE-GC22 DDR2 DRAM modules we find on earlier cards, the Samsung GDDR3 memory requires just 2.0V, versus 2.5V for DDR2. This should help ease up on power consumption.

However, as GDDR3 RAM runs with an even higher latency than DDR2, nVIDIA have had to clock the memory 50 MHz higher as well. This should offset any performance penalty that GDDR3 suffers relative to DDR2.

Overclocking the FX5700U3!

As usual we started with the core overclocking first; from 450 MHz we slowly went up a few MHz at a time. The core was a pretty good overclocker and the FX5700U3 went all the way up to 571 MHz before it started to have any problems. Anything higher though, and the computer would lock up when running any 3D application.

Since this is the first time we've dealt with GDDR3, we really didn't know what to expect from the memory... but with 2ns DRAM we were hoping to hit some nice speeds. By default the memory is clocked at 950 MHz, 50 MHz higher than regular GeForceFX 5700 Ultra DDR2-based videocards. This is done to make up for a small latency penalty.
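For readers wondering what the 2ns rating implies, here is a minimal sketch of the arithmetic. It assumes the memory clocks quoted in this review are effective (double data rate) figures, and the function name `rated_effective_mhz` is just an illustrative helper, not anything from nVIDIA or Samsung.

```python
def rated_effective_mhz(cycle_time_ns: float) -> float:
    """Effective DDR data rate (MHz) implied by a cycle-time rating in ns."""
    clock_mhz = 1000.0 / cycle_time_ns  # a 1 ns cycle corresponds to 1000 MHz
    return clock_mhz * 2                # DDR moves data twice per clock


rated = rated_effective_mhz(2.0)  # 2ns DRAM, as on this card
default_mhz = 950                 # stock memory speed quoted above (assumed effective)

print(f"rated: {rated:.0f} MHz, headroom over stock: {rated - default_mhz:.0f} MHz")
```

Under those assumptions, 2ns memory is rated for about 1 GHz effective, so the stock 950 MHz setting leaves roughly 50 MHz of on-paper headroom.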

Not surprisingly we were able to crack the 1 GHz mark quite easily, but we were not able to go much higher than that. The maximum speed the FX5700U3 memory reached was 1.02 GHz - if we tried to go higher we'd be greeted by artifacts like in Raiders of the Lost Ark... you remember the big artifacts that spit out poison tipped darts, gigantic rock bowling balls, and all that fun stuff? Same thing here. :-)
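To put the overclocking results in perspective, here is a quick sketch that converts the stock and maximum clocks quoted above into percentage gains. The helper name `overclock_gain_pct` is made up for illustration; the clock values are the ones reported in this review.

```python
def overclock_gain_pct(stock_mhz: float, oc_mhz: float) -> float:
    """Percentage increase of the overclocked speed over the stock speed."""
    return (oc_mhz - stock_mhz) / stock_mhz * 100.0


core_gain = overclock_gain_pct(450, 571)   # core: 450 MHz -> 571 MHz
mem_gain = overclock_gain_pct(950, 1020)   # memory: 950 MHz -> 1.02 GHz

print(f"core: {core_gain:.1f}%  memory: {mem_gain:.1f}%")
# prints "core: 26.9%  memory: 7.4%"
```

In other words, the core had far more headroom than the GDDR3 memory did on this particular card.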

| PCStats Test System Specs: | |
|---|---|
| Processor: | Intel Pentium 4 3.0C |
| Clock Speed: | 15 x 200 MHz = 3.0 GHz |
| Motherboards: | Gigabyte 8KNXP, i875P |
| Videocard: | ATI Radeon 9800XT, ATI Radeon 9800 Pro, ATI Radeon 9700 Pro, Asus Radeon 9600XT, MSI FX5950 Ultra-TD128, MSI FX5900U-VTD256, MSI FX5900XT-VTD128, Gigabyte GV-NV57U128D, Albatron GeForceFX 5700 U3 |
| Memory: | 2x 256MB Corsair TwinX 3200LL |
| Hard Drive: | 40GB WD Special Ed |
| CDROM: | NEC 52x CD-ROM |
| Powersupply: | Vantec Stealth 470W |
| Software Setup | WindowsXP Build 2600, Intel INF 5.02.1012, Catalyst 3.9, Detonator 53.03 |
| Workstation Benchmarks | 3DMark2001SE, CodeCreatures, AquaMark, AquaMark3, Gun Metal 2, X2 The Threat, UT2003 |
| AA Test, AF and AA+AF Test | 3DMark2001SE, X2 The Threat, UT2003 |

By combining DirectX8 support with completely new graphics, 3DMark2001SE continues to provide good overall system benchmarks. It has been created in cooperation with the major 3D accelerator and processor manufacturers to provide a reliable set of diagnostic tools. The suite demonstrates 3D gaming performance by using real-world gaming technology to test a system's true performance abilities. Tests include: DirectX8 Vertex Shaders, Pixel Shaders and Point Sprites, DOT3 and Environment Mapped Bump Mapping, support for Full Scene Anti-aliasing and Texture Compression, and two game tests using Ipion real-time physics.

Higher numbers denote better performance.

It shouldn't be any surprise that the Albatron GeForceFX 5700U3 performs the same as the Gigabyte GeForceFX 5700 Ultra. Overclocking boosts the score a bit.