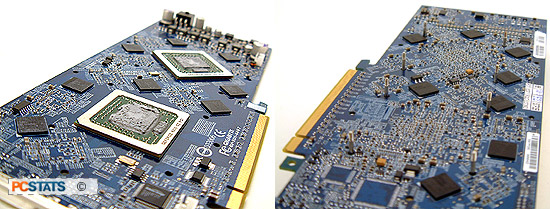

Built on IBM's 0.13 micron manufacturing process and weighing in at 222 million transistors, a single nVidia GeForce 6800GT core has almost as many transistors as an AMD Athlon64 X2 (233 million) or Intel Pentium D (230 million) processor. Team that up with a second core, some high speed Samsung 1.6ns GDDR3 memory, and an onboard power supply and you've got yourself a very complex videocard.

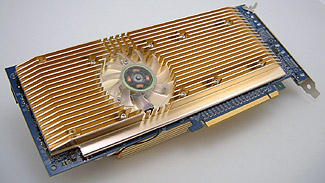

To keep temperatures within an acceptable range, Gigabyte cools the GeForce 6800GT GPUs and memory on the front of the videocard with two massive aluminum heatsinks. The heatsinks share a large 50mm fan, and the same airflow also cools the GV-3D1-68GT's MOSFETs. The large heatsink on the front stands three centimeters tall, so the expansion slot directly below the PCI Express x16 slot will be blocked, and most likely the one after that as well.

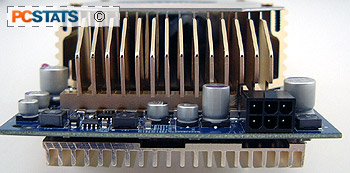

On the back of the Gigabyte GV-3D1-68GT is a large aluminum heatsink that cools not just 256MB of memory, but also the PCB directly behind each GeForce 6800GT core. A 10 x 60mm fan keeps the rear of the videocard cool. Between the memory/PCB and the heatsink are thick thermal pads which help transfer heat to the heatsink.

The heatsink on the back of the GV-3D1-68GT stands ~1.5 cm in height. If the motherboard's Northbridge heatsink sits closer to the PCI Express x16 slot than that, there will be clearance problems.

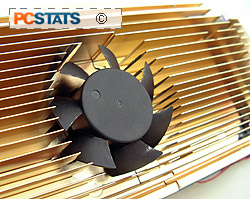

The 50mm fan on the front of the Gigabyte GV-3D1-68GT spins at high speed and generates quite a bit of noise. Even in an enclosed case the 50mm fan noise will be noticeable. For the gaming enthusiast this will most likely not be a problem, since performance is always number one, but regular gamers may find it a real turn-off.

Gigabyte SLI Motherboard-only Compatibility

While the Gigabyte GV-3D1-68GT is physically compatible with all PCI Express x16 motherboards on the market, it will only operate on non-Gigabyte boards in single GPU mode. There are a few reasons behind this. First, only Gigabyte nForce4 SLI motherboards know how to divvy up the PCI Express x16 bus properly between the two GeForce 6800GT cores.

Recent Gigabyte nForce4 SLI motherboards also come with special selector switches that are designed to divide the PCI Express x16 bus between the two GeForce 6800GT cores, rather than divide the bus between two physical PCI Express x16 slots. For the purposes of this review, PCSTATS will be testing the GV-3D1-68GT with the Gigabyte GA-K8N Ultra-SLI motherboard.

Next, while most motherboards implement SLI switching in a way that is physically similar to Gigabyte's, supporting the card would require the cooperation of rival manufacturers. Why would these companies allow for GV-3D1-68GT support when they can just do the same thing themselves? Asus has followed suit and has developed a couple of single PCB SLI videocards, although none have reached the retail market yet.

If you are wondering why Gigabyte doesn't simply develop a PCI Express x16 buffer splitter, the answer might be restrictions imposed by nVIDIA.

For SLI to be supported, the Forceware drivers must first detect an nForce4 SLI chipset. Even if compatible cards are present in a PCI Express x8/x8 configuration, SLI cannot be enabled without driver modification. Albatron was able to get SLI working on a 915PL-based motherboard, but only with Albatron-modded drivers.
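The compatibility rules described above can be summed up in a few lines of Python. This is only a rough sketch of the behaviour as we understand it, not anything taken from the Forceware drivers or from Gigabyte. The function names, the `modded_driver` flag and the string values are all hypothetical and used purely for illustration.

```python
def driver_allows_sli(chipset: str, modded_driver: bool = False) -> bool:
    """Sketch of the driver-side check: stock drivers want an nForce4 SLI chipset.

    `modded_driver` is a hypothetical flag standing in for vendor-modified
    drivers such as the ones Albatron used on its 915PL board.
    """
    if modded_driver:
        return True
    return chipset.strip().lower() == "nforce4 sli"


def gv3d1_mode(board_vendor: str, chipset: str) -> str:
    """Sketch of the mode the GV-3D1-68GT is expected to run in.

    Per the review, dual GPU operation needs a Gigabyte nForce4 SLI board;
    every other PCI Express x16 board falls back to single GPU mode.
    """
    is_gigabyte = board_vendor.strip().lower() == "gigabyte"
    if is_gigabyte and driver_allows_sli(chipset):
        return "dual"
    return "single"


if __name__ == "__main__":
    print(gv3d1_mode("Gigabyte", "nForce4 SLI"))  # Gigabyte SLI board
    print(gv3d1_mode("Asus", "nForce4 SLI"))      # rival SLI board
    print(driver_allows_sli("915PL"))             # stock drivers, Intel chipset
```

Note that the two checks are kept separate on purpose: the driver check applies to any SLI setup, while the Gigabyte-only restriction is specific to this videocard.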

It's hard to tell for sure what the situation is because nVIDIA states that it only approves 'platforms', not hardware. But if the assumptions we've made are correct, nVIDIA must change its policy towards SLI before videocards like the Gigabyte GV-3D1-68GT can be really successful on a mass-market level.