Before we get into heavy technical details, let's get

the "oooh" and "aaaah" specs on the new GeForce 8800GTX 'G80' graphics core

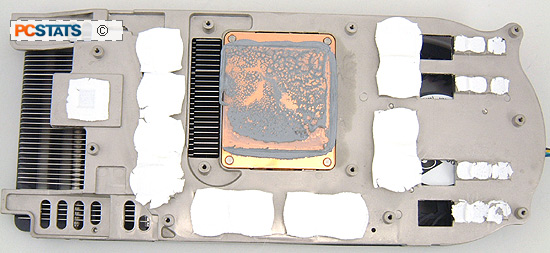

out of the way. The nVidia 'G80" CPU is built on TSMC's 90 nanometer

manufacturing process, and it contains... get this... 681 million transistors. The Geforce 7800GTX 'G70' had 302 million transistors, and by way of comparison the ATI Radeon X1900XTX has 384 million transistors. In its current form the G80 demands a lot of power, and generates a huge amount of heat; upwards of 80W-85W under load. This is why the reference design requires such a large heatsink.
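To put those transistor counts in perspective, here is a quick ratio calculation. It is plain arithmetic on the three figures quoted above and nothing more:

```python
# Transistor counts (in millions) as quoted in this review
transistors_m = {
    "GeForce 8800GTX (G80)": 681,
    "GeForce 7800GTX (G70)": 302,
    "Radeon X1900XTX": 384,
}

g80 = transistors_m["GeForce 8800GTX (G80)"]

# How many times larger the G80 is than each of the other chips
ratios = {
    name: g80 / count
    for name, count in transistors_m.items()
    if name != "GeForce 8800GTX (G80)"
}

for name, ratio in ratios.items():
    print(f"G80 has {ratio:.2f}x the transistors of the {name}")
```

In other words, the G80 packs roughly 2.25 times the transistors of the G70 and about 1.77 times those of the X1900XTX.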

nVIDIA's 'G80' is the first DirectX 10 compatible GPU on the market, and it is completely different from videocards of the past. The 'G80' utilizes a unified architecture, which is another way of saying that it merges vertex and pixel shaders into one floating point processor. Other GPUs have taken this route, notably the ATI graphics processor inside the Xbox 360, but nVIDIA is the first to release this type of technology for the home PC.

Looking at the nVIDIA block diagram of the G80, we can see there are eight "Thread Processors", each with 16 Stream Processors (SP), for a total of 128. Gone are the hard-coded vertex and pixel shaders we're used to seeing; they have been replaced with the more flexible Stream Processor, which calculates both types of data. The Stream Processors run at a blistering 1.35 GHz. Traditional core clock speeds as we know them are dead, since several internal processors run at different speeds.
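The shader arithmetic behind those numbers is simple enough to sketch. This only multiplies out the figures given in the paragraph above; it counts aggregate SP clock cycles per second, not FLOPS, since how much work each SP does per cycle is not covered here:

```python
# Shader layout as described above: 8 Thread Processors x 16 Stream Processors
THREAD_PROCESSORS = 8
SP_PER_THREAD_PROCESSOR = 16
SP_CLOCK_GHZ = 1.35  # Stream Processor clock quoted for the 8800GTX

total_sp = THREAD_PROCESSORS * SP_PER_THREAD_PROCESSOR

# Every SP ticks 1.35 billion times per second, so the whole array delivers
# total_sp * 1.35e9 SP clock cycles per second (not a FLOPS figure)
sp_cycles_per_second = total_sp * SP_CLOCK_GHZ * 1e9

print(total_sp)  # 128
print(f"{sp_cycles_per_second:.3e} SP cycles per second")
```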

Each Thread Processor has two groups of eight SPs, and each group talks to an exclusive texture address and filter unit as well as being connected to the shared L1 cache. When more memory is needed, the Thread Processor connects to the crossbar memory controller. nVIDIA's crossbar memory controller is broken up into six 64-bit chunks, which means the interface is essentially 384 bits wide. That is 50% wider than the 256-bit interfaces of previous generations.
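The bus-width figures work out as follows. This is a small sketch that assumes the previous generation used four 64-bit channels (a 256-bit interface), which is what the 50% figure implies:

```python
CHANNELS = 6             # 64-bit memory partitions on the G80, per the text
CHANNEL_WIDTH_BITS = 64
PREVIOUS_CHANNELS = 4    # assumption: 4 x 64-bit = 256-bit on the prior generation

bus_width = CHANNELS * CHANNEL_WIDTH_BITS                  # total interface width in bits
previous_width = PREVIOUS_CHANNELS * CHANNEL_WIDTH_BITS
increase_pct = (bus_width - previous_width) / previous_width * 100
bytes_per_transfer = bus_width // 8                        # data moved per memory transfer

print(bus_width, previous_width, increase_pct, bytes_per_transfer)
```

At the same memory clock, a 384-bit interface moves 48 bytes per transfer instead of 32, which is where the 50% gain in raw bandwidth comes from.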

By moving the GPU towards a threaded design, nVIDIA has made the G80 much more like a processor than any graphics core of the past. Any type of data, be it pixel, vertex, or geometry shader work, can be processed within the SPs. This allows load balancing to occur between the various tasks.

Although DirectX 9 does not support unified shader instructions, load balancing is handled automatically by the GPU, so it's not something developers have to worry about. Load balancing keeps the shader hardware busy no matter what the situation, which matters because the mix of pixel and vertex processing shifts greatly during game play.
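A toy model helps show why load balancing pays off. The sketch below compares a hypothetical fixed split of 8 vertex and 24 pixel units against 32 unified units on two made-up workloads. The unit counts, workloads, and the "one work item per unit per cycle" rule are all illustrative assumptions, not G80 measurements, and the model ignores dependencies between the vertex and pixel stages:

```python
import math

def fixed_pipeline_cycles(vertex_work, pixel_work, vertex_units, pixel_units):
    """Cycles for a split design: each pool only runs its own kind of work,
    so the busier pool sets the pace while the other sits partly idle."""
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))

def unified_cycles(vertex_work, pixel_work, total_units):
    """Cycles for a unified design: any unit can take any work item."""
    return math.ceil((vertex_work + pixel_work) / total_units)

# Hypothetical workloads (work items per frame), not measured data
scenes = {
    "pixel-heavy": (80, 2400),
    "vertex-heavy": (1600, 800),
}

VERTEX_UNITS, PIXEL_UNITS = 8, 24          # hypothetical fixed split
TOTAL_UNITS = VERTEX_UNITS + PIXEL_UNITS   # same unit budget, unified

results = {}
for name, (v, p) in scenes.items():
    results[name] = (fixed_pipeline_cycles(v, p, VERTEX_UNITS, PIXEL_UNITS),
                     unified_cycles(v, p, TOTAL_UNITS))
    print(name, "fixed vs unified cycles:", results[name])
```

With these numbers the fixed design needs 100 and 200 cycles, while the unified pool needs 78 and 75: the split design stalls on whichever pool is overloaded, whereas the unified pool absorbs the shift.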

While there are 128 Stream Processors broken down into 16 eight-SP chunks, there are only 32 texturing units in total, four per Thread Processor. The texturing units run at the core clock speed and, as the count suggests, can handle 32 textures per clock. One of the most important "features" is that the texture units operate independently of the SPs, so texturing can occur at the same time as shader rendering.
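The texture-unit math can be sketched the same way. Since the core clock is not given in this section, the sketch takes it as a parameter; the 500 MHz used in the usage line is purely a hypothetical example value:

```python
THREAD_PROCESSORS = 8
TEXTURE_UNITS_PER_TP = 4
TOTAL_SP = 128

texture_units = THREAD_PROCESSORS * TEXTURE_UNITS_PER_TP   # 32 in total
textures_per_clock = texture_units       # one texture per unit per clock, per the text
sp_per_texture_unit = TOTAL_SP // texture_units            # 4 SPs share each texture unit

def texel_rate_gtexels(core_clock_mhz):
    """Peak textures per second, in billions, at a given core clock.
    The texture units run at core clock, which is passed in as an assumption."""
    return textures_per_clock * core_clock_mhz / 1000

print(texture_units, sp_per_texture_unit)
print(texel_rate_gtexels(500))  # hypothetical 500 MHz core clock
```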

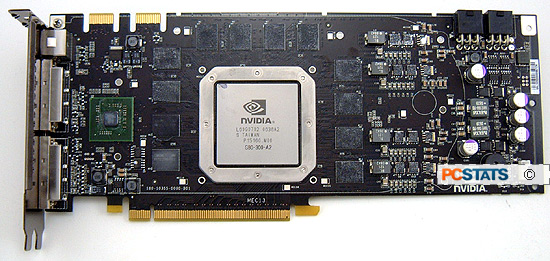

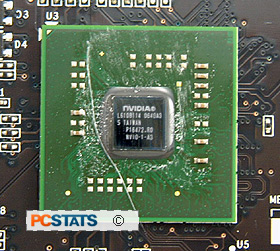

One other new feature the G80 brings to the table is a stand-alone video display engine designed specifically for this GPU generation. Set off to the left of the G80 GPU is nVIDIA's new discrete display chip. This chip supports both TMDS logic for LCD monitors and RAMDACs for analog displays. Moving the display engine outside the GPU allows nVIDIA to have less overhead with multi-GPU videocards.

Dual SLI Connectors for Quad SLI?

As you've probably noticed, there are two SLI connectors on the top left-hand corner of the MSI NX8800GTX-T2D768E-HD videocard. Only one connector is required to enable traditional SLI (with two videocards), so what's the second SLI connector for?

nVIDIA itself has been mum on the subject, stating simply that the second connector is for potential future SLI enhancements. Does that mean four independent videocards running in SLI in the future? nVIDIA has already demonstrated that Quad SLI is possible with its GeForce 7950GX2. ;-)

At the moment, only one SLI connector is necessary to enable SLI, and you can use either the left or the right one; it's up to you. Just make sure you use the connector on the same side of the secondary videocard.

If you're considering running two GeForce 8800GTXs in SLI, make sure you check out nVIDIA's Certified SLI-Ready Power Supplies list, because the computer will be drawing a large amount of power.

We'd love to test SLI on the GeForce 8800GTX, but unfortunately there is only one card at our disposal. Next up: power consumption tests for a videocard... yup, you read that right.