The

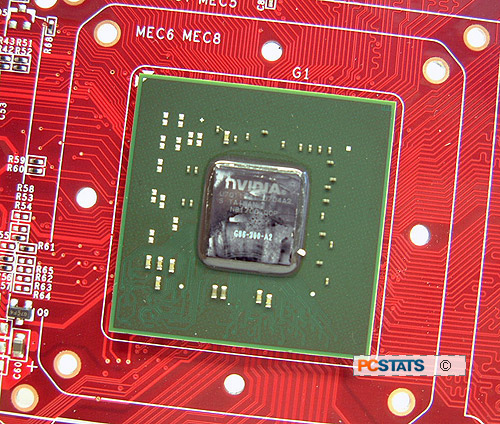

GeForce 8500GT videocard is

based on nVidia's 'G86' GPU, which is built on TSMC's 80nm manufacturing process. The

chip contains 210 million transistors. According to nVIDIA, the G86 is rated to

draw a maximum of 43W of power, well below the limit of what the PCI

Express x16 slot can provide.

The GeForce 8500GT has a default core clock speed of 450 MHz. The main difference between the nVIDIA G86 core and the G84 found in the GeForce 8600 series is the number of stream processors available. The GeForce 8500GT's G86 GPU has only 16 stream processors, while the G84 has 32 SPs and the G80 has 96/128.

Essentially, that means the GeForce 8500GT has half the stream processors, and so roughly half the shader throughput, of the GeForce 8600 series at equal clock speeds.
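To show where the "half" comes from, here is a minimal sketch that models raw shader resources as stream processor count multiplied by shader clock. It assumes, as a simplification, one operation per SP per clock and ignores memory bandwidth, texture units and drivers. The 16 SPs and the 900 MHz Stream Processor clock are the 8500GT figures given in this article; the 32-SP part running at the same 900 MHz is a hypothetical used only to isolate the effect of SP count.

```python
# Toy model: raw shader resources = stream processors x shader clock.
# Assumption: one operation per SP per clock; memory, texture units and
# driver effects are ignored.

def shader_ops_per_second(stream_processors: int, shader_clock_mhz: float) -> float:
    """Return SP-cycles per second under the one-op-per-clock simplification."""
    return stream_processors * shader_clock_mhz * 1e6

# GeForce 8500GT as described in the article: 16 SPs at 900 MHz.
gf8500gt = shader_ops_per_second(16, 900)

# Hypothetical 32-SP G84-class part at the SAME 900 MHz shader clock,
# so only the SP count differs.
g84_same_clock = shader_ops_per_second(32, 900)

ratio = gf8500gt / g84_same_clock
print(f"8500GT vs 32-SP part at equal clocks: {ratio:.2f}x")  # 0.50x
```

Real GeForce 8600 cards do not run at the 8500GT's clocks, so the actual gap depends on each card's shader clock as well as its SP count.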

The nVIDIA GeForce 8500GT GPU can be equipped with either 256MB or 512MB of memory; the MSI GeForce 8500GT has 256MB. Memory runs at 800 MHz on a 128-bit memory bus. The relatively low memory frequency, teamed up with the narrow bus width, suggests that high resolutions and enhanced visual effects like Anti-Aliasing and Anisotropic Filtering will be too much for this GPU.
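The effect of memory clock and bus width can be put into numbers with the usual peak-bandwidth formula: bytes per transfer (bus width / 8) multiplied by transfers per second. This sketch assumes the quoted 800 MHz is the effective (DDR) data rate and reports decimal gigabytes; it is a theoretical ceiling, not a measured figure.

```python
# Peak theoretical memory bandwidth = (bus width in bytes) x (effective transfer rate).
# Assumption: 800 MHz is the effective data rate; 1 GB = 1e9 bytes.

def memory_bandwidth_gbs(bus_width_bits: int, effective_rate_mhz: float) -> float:
    """Return peak theoretical bandwidth in GB/s."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = effective_rate_mhz * 1e6
    return bytes_per_transfer * transfers_per_second / 1e9

bw = memory_bandwidth_gbs(128, 800)
print(f"128-bit @ 800 MHz effective: {bw:.1f} GB/s")  # 12.8 GB/s
```

Because bandwidth scales linearly with both terms, doubling either the bus width or the effective memory clock would double this ceiling, which is why the narrow bus and modest memory clock together limit performance at high resolutions and with AA/AF enabled.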

While the G86 core carries on with nVidia's current thinking on how a graphics processor should be built, first introduced with the G80, it marks a few notable changes from earlier GeForce designs. Gone are the hard-coded vertex and pixel shaders; they've been replaced with more flexible Stream Processors (or unified shaders) that calculate both types of data. The Stream Processors run at 900 MHz, incidentally. The traditional idea of a single core clock speed no longer applies, since several internal processors run at different speeds (here, 450 MHz for the core and 900 MHz for the Stream Processors).

Each Thread Processor has two groups of eight Stream Processors, and each group talks to its own dedicated texture address and filtering unit as well as being connected to the shared L1 cache. When more memory is needed, the Thread Processor connects to the crossbar memory controller. nVIDIA's crossbar memory controller is broken up into two 64-bit partitions for a total bus width of 128 bits. By moving the GPU towards a threaded design, the nVidia G86 is much more like a processor than any graphics core of the past. Any type of shader work, be it pixel, vertex, or geometry, can be processed by the Stream Processors, which allows the load to be balanced between the various tasks.
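Why unified shaders help with load balancing can be illustrated with a deliberately simplified toy model, not a description of how the G86 actually schedules threads. It assumes every work item takes one unit for one cycle, and compares a hypothetical fixed split of 4 vertex units and 12 pixel units against a unified pool of 16 units (the 8500GT's stream processor count). The workload sizes are invented for illustration.

```python
import math

def fixed_pipeline_cycles(vertex_work: int, pixel_work: int,
                          vertex_units: int, pixel_units: int) -> int:
    """Cycles when each work type may only run on its own dedicated units.

    The frame is done only when the slower of the two unit types finishes,
    so units of the other type sit idle in the meantime.
    """
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))

def unified_cycles(vertex_work: int, pixel_work: int, total_units: int) -> int:
    """Cycles when any unit can take any work item (unified shaders)."""
    return math.ceil((vertex_work + pixel_work) / total_units)

SPLIT = (4, 12)      # hypothetical fixed design: 4 vertex units, 12 pixel units
TOTAL = sum(SPLIT)   # 16 units, matching the 8500GT's stream processor count

# Invented workloads: (name, vertex work items, pixel work items)
for name, vtx, pix in [("pixel-heavy", 40, 600), ("vertex-heavy", 400, 240)]:
    fixed = fixed_pipeline_cycles(vtx, pix, *SPLIT)
    unified = unified_cycles(vtx, pix, TOTAL)
    print(f"{name}: fixed={fixed} cycles, unified={unified} cycles")
```

In both cases the unified pool finishes in 40 cycles because no unit sits idle, while the fixed split takes 50 or 100 cycles depending on which type of unit becomes the bottleneck.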

nVidia PureVideo HD:

As for miscellaneous core technologies, the GeForce 8500GT supports HDCP over DVI by default. With the release of the GeForce 8500GT core, nVIDIA is also updating its PureVideo technology. With "PureVideo HD", as the update is dubbed, the videocard can now handle the entire decoding process of HD-DVD and Blu-ray movies. According to nVIDIA, 100% of high definition video decoding can be offloaded onto the videocard at bitrates of up to 40Mb/s.

As it stands, PureVideo HD benefits only Blu-ray/HD-DVD movies and does not boost WMV9 playback at this time. That means high definition videos using the Microsoft standard will only be accelerated to previous PureVideo levels.

Examining CPU Load with PureVideo

To test PureVideo's high definition acceleration capabilities on the MSI NX8500GT-TD256E videocard, PCSTATS will play back a video downloaded from Microsoft's WMV HD Content Showcase through Windows Media Player 10. "The Discoverers" (IMAX) video is available in both 720P and 1080P formats. AMD processor utilization will be monitored via Task Manager.

When playing the 720P version of The Discoverers, CPU usage hovers between 15 and 20%.

nVIDIA's PureVideo does an excellent job of keeping CPU usage down, and here the system is running the 1080P version of The Discoverers video.

That's pretty good, wouldn't you say?

Next up, PCSTATS tries its hand at overclocking the MSI GeForce 8500GT videocard!