In a perfect world, we'd all be running the latest quad-core processors with two GeForce 8800 Ultras or a pair of Radeon HD 2900XTs running in parallel, with a stack of Western Digital Raptors under the hood. Ahh... brings a tear to your eye, doesn't it? In real life, though, a system like that would cost a fortune, and most of us can't afford such luxuries (as much as we might like to). Compromises must be made if you expect to keep next month's VISA bill under $5000, and with high-end videocard prices through the roof right now, we're going to have to cut back on the graphics a little... a little more... a lot?

A couple of years after its introduction, nVIDIA's SLI technology is now generally accepted by software developers and gamers alike, and most new games support SLI by default. This brings up an interesting dilemma for consumers like you and me. Should we buy a single ultra-fast videocard, or save a bundle by picking up two slower videocards and running them in SLI mode?

The answer is tricky, because running two identical videocards in SLI does not double their performance. Amazing what hype can make you think, eh? In theory, the best you can expect from nVIDIA SLI is around a 40-50% increase in 3D power, which really isn't bad at all. Does that hold up in practice? Are two videocards better than one when it comes to gaming?
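As a rough illustration of what a 40-50% gain means in frame rates, here is a minimal Python sketch. The `sli_fps` helper and the 30 fps baseline are hypothetical, not PCSTATS measurements; the scaling value is simply the fractional gain the second card adds.

```python
def sli_fps(single_card_fps: float, scaling: float) -> float:
    """Estimated SLI frame rate from one card's frame rate.

    scaling is the fractional gain from the second card:
    1.0 would be perfect doubling, 0.4-0.5 is the range quoted above.
    """
    return single_card_fps * (1.0 + scaling)


# Hypothetical baseline: one card averaging 30 fps in some game.
baseline = 30.0
for gain in (0.40, 0.50, 1.00):
    print(f"{gain:.0%} scaling -> {sli_fps(baseline, gain):.1f} fps")
```

With a 30 fps baseline, 40-50% scaling works out to roughly 42-45 fps, well short of the 60 fps that "double the cards, double the speed" would suggest.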

We're going to do things a bit differently in this

review. Instead of focusing on high end videocards, we're going to look at what

SLI can do for mainstream users with a pair of Geforce 8500GT videocards running

in SLI. With a general retail price between $70-90 CDN, nVIDIA GeForce 8500GT

based videocards are very affordable. What PCSTATS wants to know is

whether two of these cards running in parallel is faster than say a

GeForce 8600GTS? The two GeForce 8500GT videocards that we'll be pitting

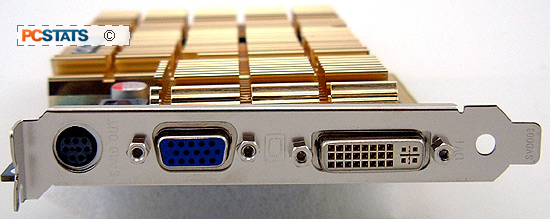

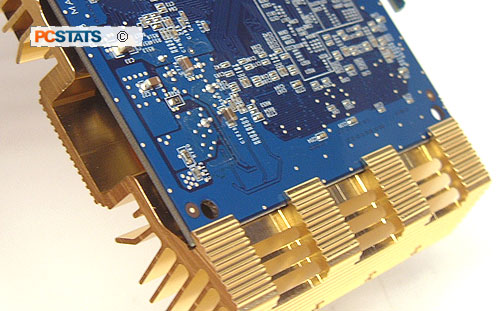

everything against are made by Gigabyte, the GV-NX85T256Hs model. Each Gigabyte

GV-NX85T256H is passively cooled and have 256MB of on board GDDR2

memory. PCSTATS individual review of the GV-NX85T256H card is posted here.

Gigabyte's GV-NX85T256H GeForce 8500GT videocard is based on nVIDIA's 'G86' GPU, which is built on TSMC's 80nm manufacturing process. The GPU at the heart of this videocard contains a moderate 210 million transistors. According to nVIDIA, the G86 is rated to draw a maximum of 43W, well below the 75W that a PCI Express x16 slot can supply.

The GeForce 8500GT GPU has a default clock speed of 450 MHz. The main difference between the nVIDIA G86 core and the G84 found in the GeForce 8600 series is the number of stream processors available. The GeForce 8500GT GPU has only 16 stream processors, while the G84 has 32 and the G80 has 96 or 128, depending on the model.


Essentially, that means the GeForce 8500GT has half the stream processors of the GeForce 8600 series, and therefore roughly half its rendering power at the same clock speed.

The nVIDIA GeForce 8500GT GPU can be equipped with either 256MB or 512MB of memory; this Gigabyte GV-NX85T256H GeForce 8500GT has 256MB.

Memory runs at 800 MHz on a 128-bit memory bus. The relatively low memory operating frequency, teamed up with the narrow memory bus width, suggests that high resolutions and enhanced visual effects like Anti-Aliasing and Anisotropic Filtering will be too much for this GPU.
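The memory bandwidth behind that conclusion is easy to estimate. The Python sketch below assumes the quoted 800 MHz is the effective (double data rate) transfer rate of the GDDR2 memory; `memory_bandwidth_gbs` is a hypothetical helper, and the result is a theoretical peak, not a measured figure. It also shows the share of each 64-bit chunk, since nVIDIA splits the 128-bit bus into two 64-bit halves.

```python
def memory_bandwidth_gbs(effective_mhz: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes).

    Assumes effective_mhz is the effective transfer rate (transfers per
    second / 10**6), so no extra DDR multiplier is applied here.
    """
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


total = memory_bandwidth_gbs(800, 128)         # full 128-bit bus
per_partition = memory_bandwidth_gbs(800, 64)  # one 64-bit chunk
print(f"Total: {total:.1f} GB/s, per 64-bit chunk: {per_partition:.1f} GB/s")
```

Under that assumption the card tops out around 12.8 GB/s in total, which is why bandwidth-hungry settings like high resolutions with AA and AF are expected to hurt.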

The G86 core carries forward the design nVIDIA introduced with the G80, which differs notably from older GeForce GPUs. Gone are the hard-coded vertex and pixel shaders; they've been replaced with a more flexible Stream Processor (or unified shader) design that can handle both types of data. Incidentally, the Stream Processors run at 900 MHz, twice the 450 MHz core clock. The traditional idea of a single core clock speed no longer applies, since several internal units run at different speeds.
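Because the shader and core clocks now differ, one rough way to compare parts is stream processor count multiplied by shader clock. The Python sketch below does that for the 16-SP, 900 MHz GeForce 8500GT. It assumes each Stream Processor completes one multiply-add (2 floating-point operations) per shader clock, a simplification rather than nVIDIA's official figure; `peak_gflops` is a hypothetical helper, and the 32-SP comparison reuses the same 900 MHz clock purely for illustration.

```python
CORE_MHZ = 450           # GeForce 8500GT default core clock
SHADER_MHZ = 900         # Stream Processor clock
STREAM_PROCESSORS = 16   # GeForce 8500GT (G86)

# Assumption: one multiply-add (2 flops) per Stream Processor per shader clock.
FLOPS_PER_SP_PER_CLOCK = 2


def peak_gflops(sps: int, shader_mhz: float, flops_per_clock: int) -> float:
    """Theoretical peak shader throughput in GFLOPS."""
    return sps * shader_mhz * flops_per_clock / 1000.0


print(f"Shader clock / core clock: {SHADER_MHZ / CORE_MHZ:.1f}x")
print(f"16 SPs @ {SHADER_MHZ} MHz: "
      f"{peak_gflops(STREAM_PROCESSORS, SHADER_MHZ, FLOPS_PER_SP_PER_CLOCK):.1f} GFLOPS")
# Hypothetical: a 32-SP part at the same shader clock doubles the figure.
print(f"32 SPs @ {SHADER_MHZ} MHz: "
      f"{peak_gflops(32, SHADER_MHZ, FLOPS_PER_SP_PER_CLOCK):.1f} GFLOPS")
```

Under these assumptions the 8500GT peaks at about 28.8 GFLOPS, and doubling the stream processor count at the same clock doubles that figure, which is the sense in which the 8500GT has "half" the shader power of a 32-SP part.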

Each Thread Processor has two groups of eight Stream Processors. Each group talks to its own texture address and filtering unit, and is also connected to the shared L1 cache. When it needs to go beyond the on-chip cache, the Thread Processor connects to the crossbar memory controller. nVIDIA's crossbar memory controller is split into two 64-bit chunks, for a total bus width of 128 bits. By moving toward a threaded design, the nVIDIA G86 behaves much more like a general-purpose processor than any graphics core of the past. Any type of shader work, be it pixel, vertex or geometry, can be processed by the Stream Processors, which allows the load to be balanced between the various tasks.
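To see why that load balancing matters, the Python sketch below compares an idealized fixed design (separate vertex and pixel units) with an idealized unified pool of the same total size. The unit counts and workloads are hypothetical and real scheduling inside the G86 is far more complex; the point is only that a unified pool never sits partly idle while one kind of work piles up.

```python
import math


def fixed_pipeline_cycles(vertex_work: int, pixel_work: int,
                          vertex_units: int, pixel_units: int) -> int:
    """Cycles when vertex and pixel work can only run on their own dedicated units."""
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))


def unified_cycles(vertex_work: int, pixel_work: int, total_units: int) -> int:
    """Cycles when any unit can take any kind of work (no scheduling overhead)."""
    return math.ceil((vertex_work + pixel_work) / total_units)


# Hypothetical 16-unit budget: 4 vertex + 12 pixel units vs. 16 unified units.
for vertex, pixel in [(40, 120), (120, 40)]:
    fixed = fixed_pipeline_cycles(vertex, pixel, 4, 12)
    unified = unified_cycles(vertex, pixel, 16)
    print(f"vertex={vertex:3d} pixel={pixel:3d} fixed={fixed:2d} unified={unified:2d}")
```

When the workload happens to match the fixed split, both designs finish in 10 cycles; in the vertex-heavy case the fixed design needs 30 cycles while the unified pool still needs 10, because idle pixel units can pick up vertex work.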

Okay, now that we have all the background out of the way, let's move forward and find out if two Gigabyte GV-NX85T256H graphics cards running in SLI are really better than one GeForce 8600GTS. The individual review of the GV-NX85T256H videocard is posted here.