nVidia GeForce4 Technology Preview

Yes, it's that time of year again! nVidia has just announced its newest GPU, the GeForce4. Console gamers have actually already had a taste of what the NV25 would be like, since Microsoft's Xbox uses a variant of the same chipset, the NV2A. Now it's our turn!

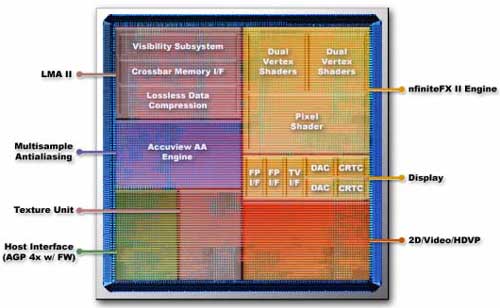

GeForce4 Ti Series:

Contrary to what the name suggests, the GeForce4 Ti's are not a totally new GPU architecture. They are built on the same .15 micron technology as the GF3, so they are more of an extension of current GeForce3 technology. At 63 million transistors, the GF4 has only 6 million more than the GeForce3's 57 million; however, there have been some advancements in the technology.

First, the GeForce4 has a second Vertex Shader. With this, the GeForce4 can pump out more polygons, and perhaps this will allow for gameplay with graphics as good as those in cinematics!

The Lightspeed Memory Architecture (LMA) that was present in the GeForce3 has been upgraded as well, with its major advancements in what nVidia calls Quad Cache. Quad Cache includes a Vertex Cache, Primitive Cache, Texture Cache and Pixel Cache. These work much like the caches on CPUs, except that each one is dedicated: it stores exactly what its name says. nVidia was very tight-lipped about the size of the caches and how they differ from the ones on the GeForce3 GPU, saying nothing more than that they were tweaked for more performance.

Probably the biggest improvement to the LMAII is the tweaking nVidia has done to the Z-occlusion culling. Most cards suffer from a great amount of overdraw (pixels are rendered in a scene even though they're not visible), so nVidia greatly tweaked the Z-occlusion culling. The GPU senses which pixels will end up hidden and skips rendering them, thus saving the bandwidth for other parts of the scene.
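To get a feel for how much work overdraw can waste, here is a minimal Python sketch of the idea. It is a simplified per-pixel model and not nVidia's actual hardware logic (which nVidia has not detailed); the function names `full_screen_layer` and `count_shaded` are our own and purely illustrative.

```python
def full_screen_layer(depth, width, height):
    """Return one fragment (x, y, depth) for every pixel on screen."""
    return [(x, y, depth) for y in range(height) for x in range(width)]


def count_shaded(fragments, width, height, z_cull):
    """Count how many fragments get fully rendered.

    Smaller depth means closer to the viewer. With z_cull on, a fragment
    hidden behind what is already in the depth buffer is skipped before
    any rendering work is spent on it.
    """
    depth_buffer = [[float("inf")] * width for _ in range(height)]
    shaded = 0
    for x, y, z in fragments:
        visible = z < depth_buffer[y][x]
        if z_cull and not visible:
            continue  # culled: no texturing, no colour write, no bandwidth
        shaded += 1   # stand-in for the expensive per-pixel work
        if visible:
            depth_buffer[y][x] = z
    return shaded


WIDTH, HEIGHT = 4, 4
# Three overlapping full-screen surfaces, nearest one submitted first.
scene = (full_screen_layer(1.0, WIDTH, HEIGHT)
         + full_screen_layer(2.0, WIDTH, HEIGHT)
         + full_screen_layer(3.0, WIDTH, HEIGHT))

print(count_shaded(scene, WIDTH, HEIGHT, z_cull=False))  # 48
print(count_shaded(scene, WIDTH, HEIGHT, z_cull=True))   # 16
```

In this toy scene, culling cuts the rendered pixels from 48 to 16. Note that this simple model only helps when nearer surfaces arrive first; if the same three layers are submitted farthest first, every pixel passes the depth check and all 48 are still rendered.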

Antialiasing has been a standard feature for a little while now; the problem was that enabling it would often render a game unplayable unless it was used at lower resolutions such as 640x480 or 800x600. With the introduction of the GeForce3 and its much more efficient memory controller, Quincunx AA was finally a viable option at higher resolutions.

One problem AA has always faced is that the resulting image is often quite blurry. This is one of the downfalls of current forms of AA, and nVidia has tried to solve it by introducing Accuview Antialiasing. With Accuview, the reference pixel for AA is no longer on the edge of the jagged line; instead it has been moved a little inside, to improve image quality by keeping the edges from all turning blurry. Please bear in mind that we do not have a reference GeForce4 video card at the moment; when we receive a test sample, we'll show pictures of what this actually looks like.

Also new to the GeForce4 is nView. While not totally new, nView is nVidia's name for its dual display capabilities. Unlike nVidia's past attempts with TwinView, the GeForce4 has dual 350 MHz RAMDACs to ensure crystal clear displays at up to 1600x1200 on two monitors.
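For a rough idea of what a 350 MHz RAMDAC buys you at 1600x1200, the short Python sketch below works out the pixel clock a given refresh rate needs. It assumes the standard VESA timing for 1600x1200, which totals 2160x1250 pixels per frame once blanking is included; the function names are our own, and real monitors and drivers may use different timings.

```python
RAMDAC_HZ = 350_000_000          # 350 MHz RAMDAC, as quoted for the GeForce4
H_TOTAL, V_TOTAL = 2160, 1250    # assumed VESA totals for 1600x1200 (with blanking)


def pixel_clock_hz(h_total, v_total, refresh_hz):
    """Pixel clock needed to scan out one full frame refresh_hz times a second."""
    return h_total * v_total * refresh_hz


def max_refresh_hz(ramdac_hz, h_total, v_total):
    """Highest refresh rate the RAMDAC could drive with these timings."""
    return ramdac_hz / (h_total * v_total)


needed = pixel_clock_hz(H_TOTAL, V_TOTAL, 85)
print(f"1600x1200 @ 85 Hz needs {needed / 1e6:.1f} MHz")
print(f"Ceiling at 350 MHz: {max_refresh_hz(RAMDAC_HZ, H_TOTAL, V_TOTAL):.1f} Hz")
```

Under these assumptions, 1600x1200 at 85 Hz needs a 229.5 MHz pixel clock, so a 350 MHz RAMDAC on each output leaves comfortable headroom on paper. Image sharpness also depends on the analog output filtering on the board, which this arithmetic says nothing about.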

Below are the different configurations of the GeForce4 Ti lineup.

| Name | GeForce4 Ti4600 | GeForce4 Ti4400 | GeForce4 Ti4200* |
| --- | --- | --- | --- |
| Core Size | .15 micron | .15 micron | .15 micron |
| Core Speed | 300 MHz | 275 MHz | 225 MHz |
| Memory Speed | 650 MHz | 550 MHz | 500 MHz |
| Memory Width | 256 bit SDR (128 bit DDR) | 256 bit SDR (128 bit DDR) | 256 bit SDR (128 bit DDR) |
| Memory Size | 128 MB | 128 MB | 128 MB |
| Memory Bandwidth | 10.4 GB/s | 8.8 GB/s | 8 GB/s |

*To be confirmed
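The bandwidth row follows directly from the memory speed and bus width. As a quick sanity check, here is a small Python sketch of the arithmetic; it assumes the memory speeds are effective (DDR) rates on a 128 bit bus and that 1 GB/s means 1000 MB/s. The function names are ours, for illustration only.

```python
def bandwidth_gb_s(effective_mhz, bus_width_bits):
    """Peak memory bandwidth in GB/s from effective clock and bus width."""
    bytes_per_transfer = bus_width_bits // 8
    return effective_mhz * bytes_per_transfer / 1000


def implied_effective_mhz(gb_per_s, bus_width_bits):
    """Effective memory clock that a quoted bandwidth figure implies."""
    bytes_per_transfer = bus_width_bits // 8
    return gb_per_s * 1000 / bytes_per_transfer


print(bandwidth_gb_s(550, 128))                      # Ti4400: 8.8
print(bandwidth_gb_s(500, 128))                      # Ti4200: 8.0
print(round(implied_effective_mhz(10.4, 128), 1))    # Ti4600: 650.0
```

Going the other way is a handy cross-check on spec sheets: the 10.4 GB/s quoted for the Ti4600 works out to an effective memory clock of 650 MHz on a 128 bit bus.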

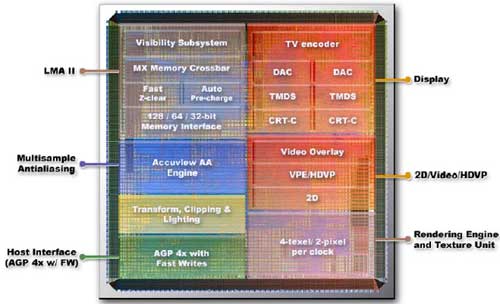

GeForce4 MX Series:

The name GeForce4 MX is a little misleading. Rather than being based on the GeForce3/4, the GeForce4 MX is actually based on the older GeForce2 MX engine, though it is also built on .15 micron technology. The GeForce4 MX does have a few enhancements to the GPU, such as a stripped-down version of the LMAII and a limited vertex shader incorporated into the core. There is no pixel shader, though.

The GeForce4 MX also has Accuview AA; however, that's more of a checklist feature than anything else, as the GeForce4 MX is not really powerful enough to use AA in most games. Like the GeForce4 Ti, the GeForce4 MX also includes nView, which is quite an upgrade from the TwinView present in current GeForce2 MX's.

| Name | GeForce4 MX460 | GeForce4 MX440 | GeForce4 MX420 |
| --- | --- | --- | --- |
| Core Size | .15 micron | .15 micron | .15 micron |
| Core Speed | 300 MHz | 275 MHz | 250 MHz |
| Memory Speed | 550 MHz | 400 MHz | 166 MHz |
| Memory Width | 256 bit SDR (128 bit DDR) | 256 bit SDR (128 bit DDR) | |
| Memory Size | 64 MB | 64 MB | 64 MB |
| Memory Bandwidth | 8.8 GB/s | 6.4 GB/s | 2.7 GB/s |

As we can see, the GeForce4 MX460 and MX440 don't suffer from the same problem that the original GeForce2 MX's did, namely the lack of memory bandwidth. The exception is the MX420, which uses SDR RAM; that card is likely going to be used by OEMs because of its official GeForce4 name and price.
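One rough way to see how well each MX core is fed is to divide the memory bandwidth by the core clock, giving the bytes of memory traffic available per core cycle. The Python sketch below uses only the figures from the table above; the metric itself is our own back-of-the-envelope measure, not an nVidia specification, and it ignores everything the LMAII does to save bandwidth.

```python
# (core clock in MHz, memory bandwidth in GB/s), taken from the MX table
mx_lineup = {
    "GeForce4 MX460": (300, 8.8),
    "GeForce4 MX440": (275, 6.4),
    "GeForce4 MX420": (250, 2.7),
}


def bytes_per_core_clock(core_mhz, bandwidth_gb_s):
    """Bytes of memory bandwidth available for every core clock cycle."""
    return bandwidth_gb_s * 1e9 / (core_mhz * 1e6)


for name, (core_mhz, gb_s) in mx_lineup.items():
    print(f"{name}: {bytes_per_core_clock(core_mhz, gb_s):.1f} bytes per core clock")
```

By this measure the MX460 and MX440 get roughly 29 and 23 bytes per core clock, while the MX420 gets only about 11, which is why it stands out as the bandwidth-starved member of the family.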