Gigabyte GV-NX66T128D GeForce 6600GT Videocard Review

nVIDIA was the first to enter the 'mainstream' PCI Express video market with the GeForce 6600 class GPU. ATi followed suit a few days later with its Radeon X700.

Although the companies are different, the technology behind the competing VPUs is actually quite similar. Built on IBM's 0.11 micron manufacturing process, the nVIDIA GeForce 6600 has eight pixel rendering pipelines, and the 'GT' model has a core clock speed of 500 MHz. ATi uses TSMC's 0.11 micron manufacturing process, and its graphics core also has eight pixel rendering pipelines. The higher-end Radeon X700 XT VPU is clocked slightly slower than nVIDIA's solution, at 475 MHz.

Where memory is concerned, things are the other way around: ATi's Radeon X700 XT clocks its memory at 1.05 GHz, while the nVIDIA solution runs at an even 1 GHz. Both cards are equipped with a 128-bit memory interface, so as you can imagine, they stack up against each other very well on paper.
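The on-paper comparison boils down to two theoretical peak figures: pixel fillrate (pipelines × core clock) and memory bandwidth (bus width in bytes × effective memory data rate). The short Python sketch below works both out from the specs quoted in this review. It assumes the quoted memory clocks (1 GHz and 1.05 GHz) are effective DDR data rates and that each pipeline outputs one pixel per clock; the `Card` class is purely illustrative.

```python
# Theoretical peak figures derived from the specs quoted in this review.
# Assumptions: memory clocks are effective (DDR) data rates, and each
# pixel pipeline outputs one pixel per core clock.
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    pipelines: int          # pixel rendering pipelines
    core_mhz: int           # core clock in MHz
    mem_effective_mhz: int  # effective memory data rate in MHz
    bus_bits: int           # memory interface width in bits

    def fillrate_mpixels(self) -> int:
        # Peak pixel fillrate in megapixels per second.
        return self.pipelines * self.core_mhz

    def bandwidth_gb_s(self) -> float:
        # Bytes per transfer times transfers per second, in GB/s (10^9 bytes).
        return (self.bus_bits // 8) * self.mem_effective_mhz / 1000


cards = [
    Card("GeForce 6600GT", 8, 500, 1000, 128),
    Card("Radeon X700 XT", 8, 475, 1050, 128),
]

for c in cards:
    print(f"{c.name}: {c.fillrate_mpixels()} Mpixels/s, {c.bandwidth_gb_s():.1f} GB/s")
```

Running it prints 4000 Mpixels/s and 16.0 GB/s for the GeForce 6600GT versus 3800 Mpixels/s and 16.8 GB/s for the Radeon X700 XT: nVIDIA leads slightly on fillrate, ATi slightly on memory bandwidth. These are theoretical ceilings only, not a prediction of real-world performance.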

The question is, just how well do they compare in the real world?

Over the next dozen pages, PCStats will try to answer that question as we test Gigabyte's GV-NX66T128D videocard.

This PCI Express x16 videocard is based on nVIDIA's GeForce 6600GT GPU and packs in a svelte 128MB of Samsung GDDR3 memory.

On the accessory front, the Gigabyte GV-NX66T128D includes a DVI/VGA converter and a Component/S-Video breakout box. The breakout box is adequate, but sadly not as nice as the one included with the GV-RX70P256V videocard. Gigabyte does a pretty good job with software, giving the end user copies of PowerDVD 5 and PowerCreator 3, as well as full versions of "Joint Operations" and "Thief: Deadly Shadows."

The Gigabyte GV-NX66T128D is only compatible with PCI Express x16 slots, so those of you with AGP-based systems will have to get your fix elsewhere. If you're holding out for a motherboard with two PCI Express x16 slots, you'll be happy to know the GV-NX66T128D is SLI-compatible as well. While consumer SLI motherboards are not yet widely available, we expect to see them early next year.

On the top left side of the card is the small SLI connector used to link it to a second, identical videocard. On the top right-hand corner is a silk-screened outline for a 12V power connector, but the connector isn't needed because the PCI Express x16 slot supplies enough power for this card.

The GV-NX66T128D requires an active cooling solution to keep the core running properly, and throughout testing the fan was extremely quiet. Even with its GDDR3 memory running at 1GHz, the DRAM operates cool enough that RAMsinks are not required.

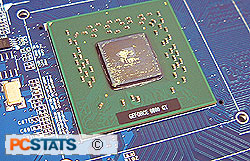

As you can see from the picture above, the nVIDIA GeForce 6600GT uses FCBGA packaging. Anyone planning to replace the stock cooler with an aftermarket solution should use a bit of caution. The exposed silicon core is very fragile and chips easily; with nothing to protect it, it's quite possible to crush the core completely (as with the first Socket A Athlons). In addition, the resistors and capacitors on the green substrate are brittle, and it doesn't take much force to knock one off. Killing a videocard is never fun.

The software package that Gigabyte bundles with the GV-NX66T128D videocard is not the largest, but it strikes a good balance between bonus software and overall cost. If Gigabyte were to include more software, the price would likely have to increase. In any case, both games (Joint Operations and Thief: Deadly Shadows) are fairly new titles that will keep you busy for a good few weekends and show off the card's capabilities well.

The breakout box that comes with the GV-NX66T128D is a bit more basic than the one that came with the last Gigabyte videocard we reviewed, and we're not as enthusiastic about it. Considering that this card only supports TV output, that's not too surprising. Ports for both S-Video and component output are present, meaning the GV-NX66T128D can connect to a regular TV as well as an HDTV-capable set!